Using Synology DNS Server for testing

Nowadays many people use their phones as their primary web browsing device. As mobile usage keeps growing, testing web applications on mobile platforms is becoming more important.

Chrome has a great emulator for mobile devices, but sometimes it’s best to test your application on an actual phone.

If your application is the default site on the web server, you can reach it via IP address and you’re fine. The problem starts when you have multiple domains to test: at some point you need to enter a domain name in your phone’s browser, and the phone has to resolve that name to your development machine.

Today I bumped into such an issue, and my solution involved one of my favourite devices in my household: the Synology DS214play.

Local DNS Server on Synology

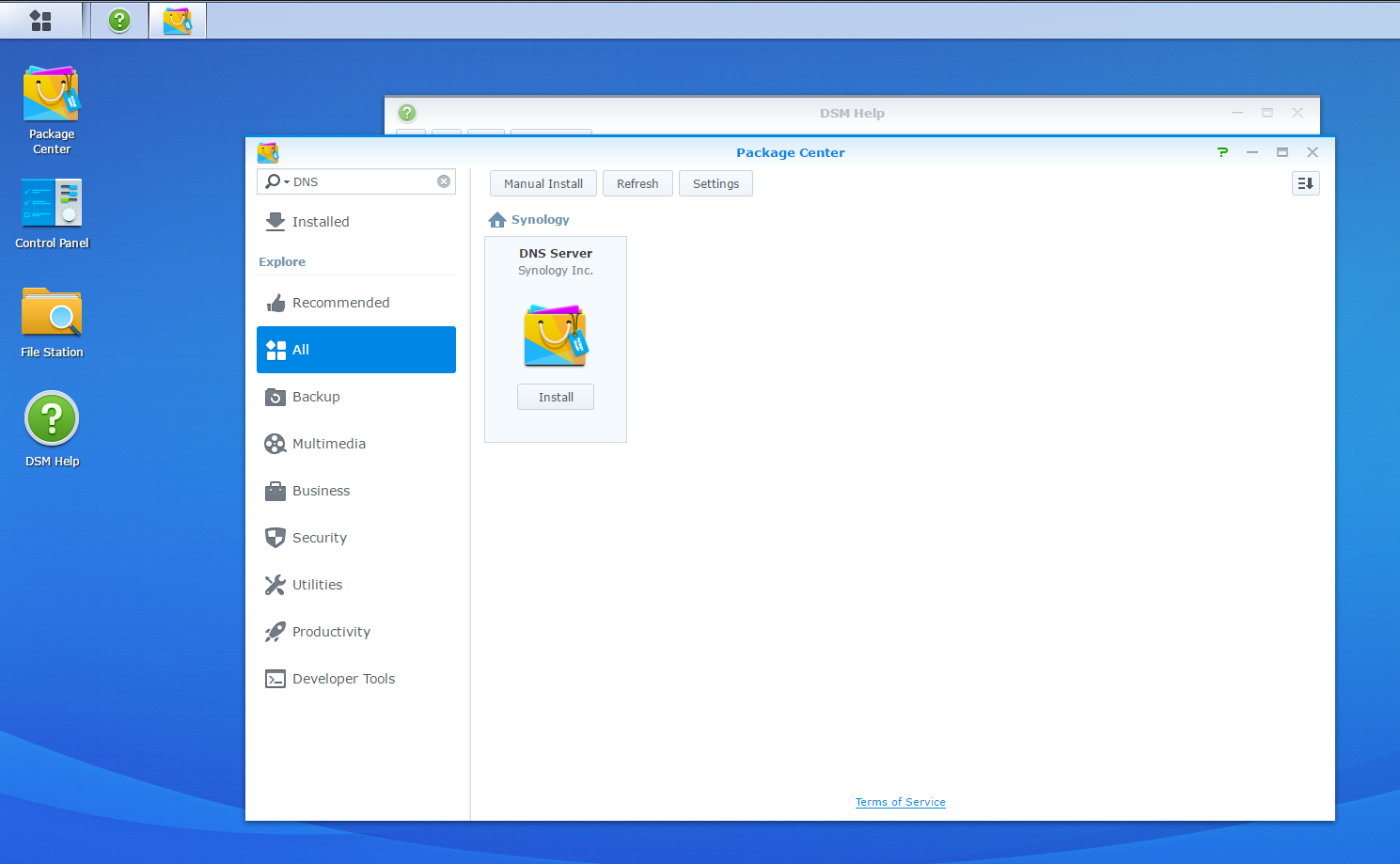

Step 01: First, I installed the DNS Server package by searching for “DNS” in Package Center and clicking Install.

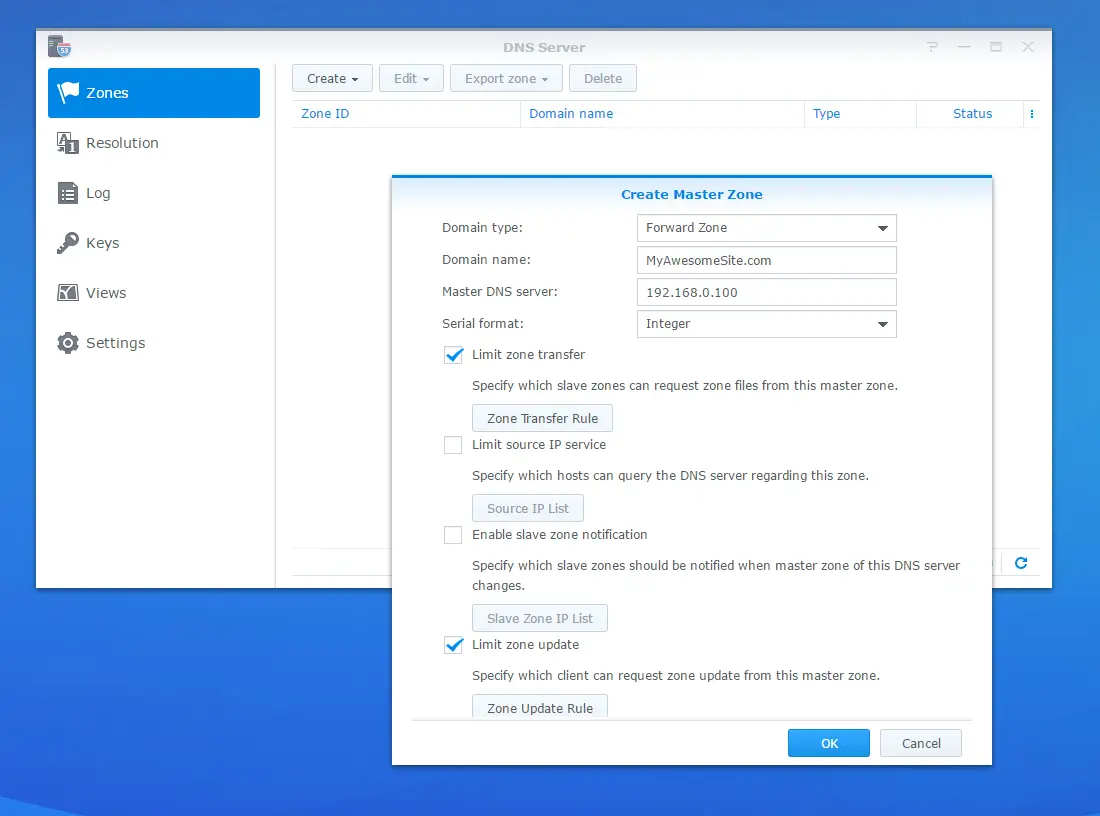

Step 02: Then, I opened the DNS Server settings and created a new Master Zone. I entered the domain name of my site, which is hosted on IIS on my development machine, and set the Synology’s local network IP address as the Master DNS Server.

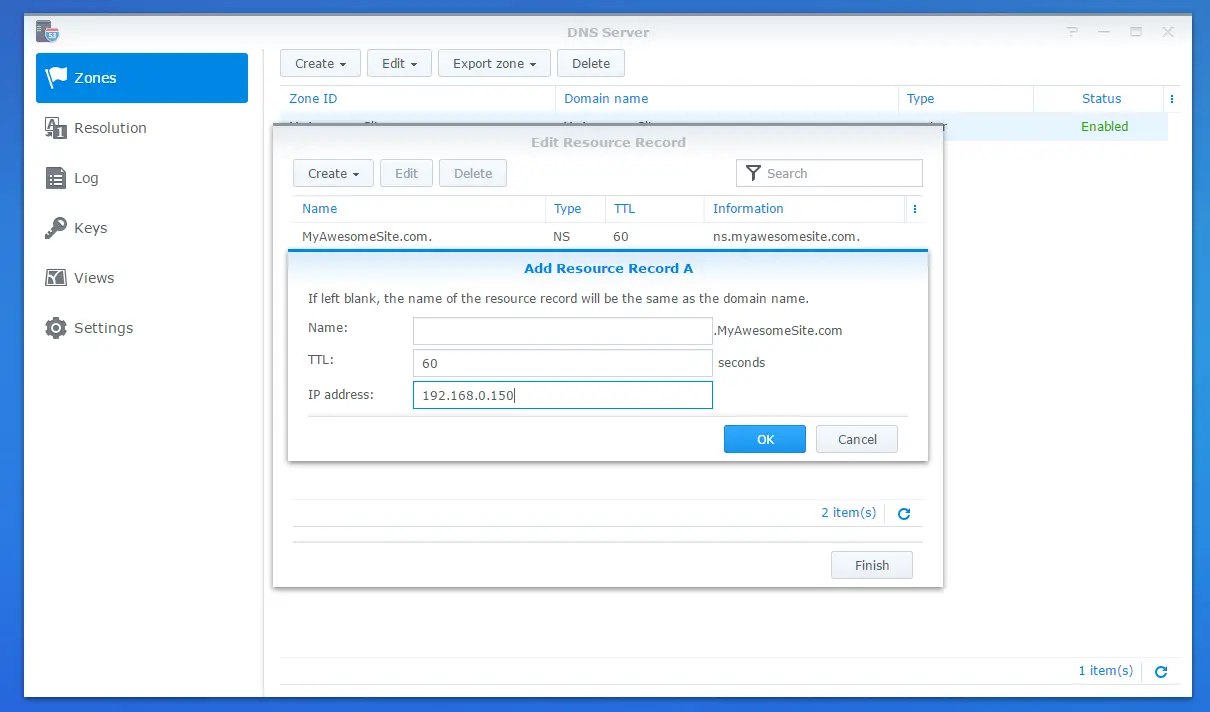

Step 03: Next, I needed to point the domain to the actual web server. To do that, I created an A record with the IP address of the local server, a.k.a. my development machine.

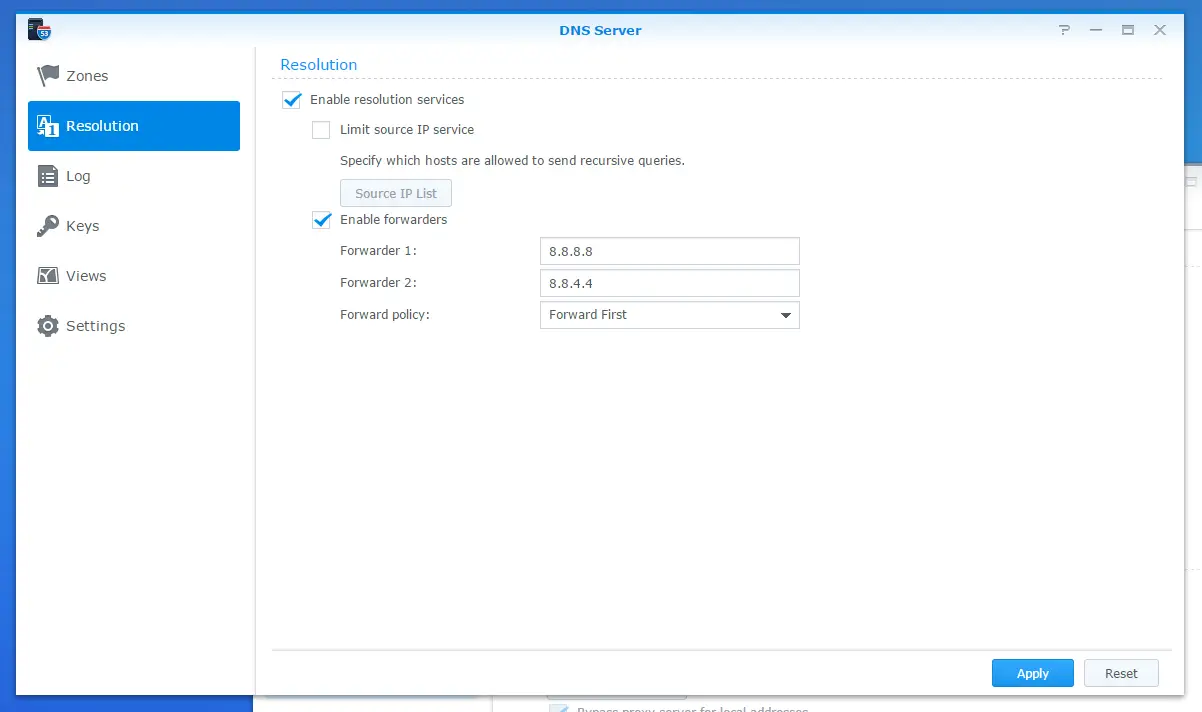

Step 04: For all the domains that my DNS server didn’t know about (which is basically everything else!), I needed to forward the requests to “actual” DNS servers. In my case I use Google’s DNS servers, so I entered those IPs as forwarders.

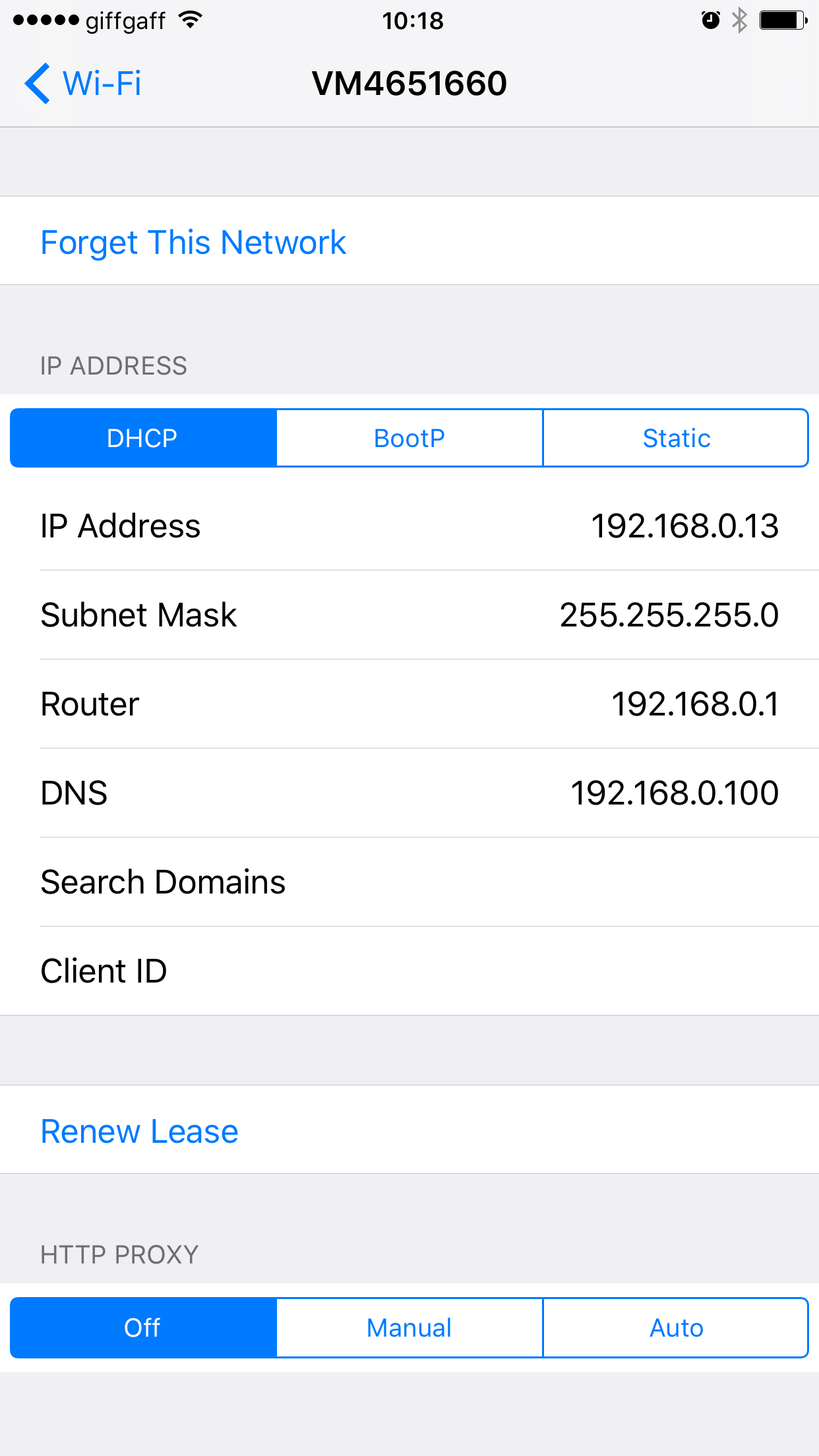
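To make the division of labour between the A record and the forwarders concrete, here is a minimal Python sketch of the lookup logic. It is only a toy model, not how the Synology package is implemented: the domain `mysite.test`, the address `192.168.1.20`, and the `fake_forwarder` helper are all made up for illustration, and a real DNS server matches on whole zones rather than on single names.

```python
from typing import Callable, Dict, Optional

# Hypothetical values for illustration only; substitute your own.
LOCAL_ZONE: Dict[str, str] = {
    "mysite.test": "192.168.1.20",  # assumed A record -> development machine
}


def fake_forwarder(name: str) -> Optional[str]:
    """Stand-in for an upstream resolver (e.g. a public DNS server)."""
    upstream = {"example.com": "203.0.113.10"}  # made-up answer for the demo
    return upstream.get(name)


def resolve(
    name: str,
    zone: Dict[str, str],
    forward: Callable[[str], Optional[str]],
) -> Optional[str]:
    """Answer from the local zone if possible, otherwise ask the forwarder."""
    key = name.rstrip(".").lower()  # DNS names are case-insensitive
    if key in zone:
        return zone[key]
    return forward(key)
```

With this model, `resolve("mysite.test", LOCAL_ZONE, fake_forwarder)` is answered locally, while any other name falls through to the forwarder.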

Step 05: At this point the Synology DNS server is pointing to the web server and the web server is hosting the website. All that is left is to point the client’s (phone or laptop) DNS setting to the local DNS server.

Step 06: Now that it’s all set up, I could access my development machine from my phone using a locally defined domain name.
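Before reaching for the phone, it can help to confirm from a laptop that already uses the Synology as its DNS server that the name resolves as expected. The following is a small sketch using Python’s standard `socket` module; the hostname `mysite.test` and the address `192.168.1.20` are placeholders I made up, not values from my setup.

```python
import socket
from typing import Callable


def points_to(
    hostname: str,
    expected_ip: str,
    lookup: Callable[[str], str] = socket.gethostbyname,
) -> bool:
    """Return True if hostname resolves to expected_ip.

    By default the system resolver is used, so the result reflects
    whatever DNS server this machine is configured with.
    """
    try:
        return lookup(hostname) == expected_ip
    except OSError:  # socket.gaierror (name not found) is a subclass
        return False


# Example call with hypothetical values:
# print(points_to("mysite.test", "192.168.1.20"))
```

The `lookup` parameter exists only so the function can be exercised without a network; in normal use you would leave it at its default.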

Conclusion

A simple alternative on Windows laptops is to edit the hosts file in the C:\Windows\System32\drivers\etc folder. But when you have multiple clients on the network, e.g. MacBooks and phones, it’s simpler to point them all at the DNS server than to edit each and every device. And it’s more fun this way!
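For comparison, a hosts file entry is just an IP address followed by whitespace and one or more host names. The helper below is a hypothetical sketch that builds such a line; `mysite.test` and `192.168.1.20` are again placeholder values.

```python
def hosts_entry(ip: str, *hostnames: str) -> str:
    """Build one hosts-file line: the IP, a tab, then the host names."""
    if not hostnames:
        raise ValueError("at least one hostname is required")
    return f"{ip}\t{' '.join(hostnames)}"


# Placeholder values; the resulting line would be appended to the hosts file.
line = hosts_entry("192.168.1.20", "mysite.test")
```

Each machine needs its own copy of that line, which is exactly the per-device maintenance the DNS server avoids.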