Adventures in F# - Part 4

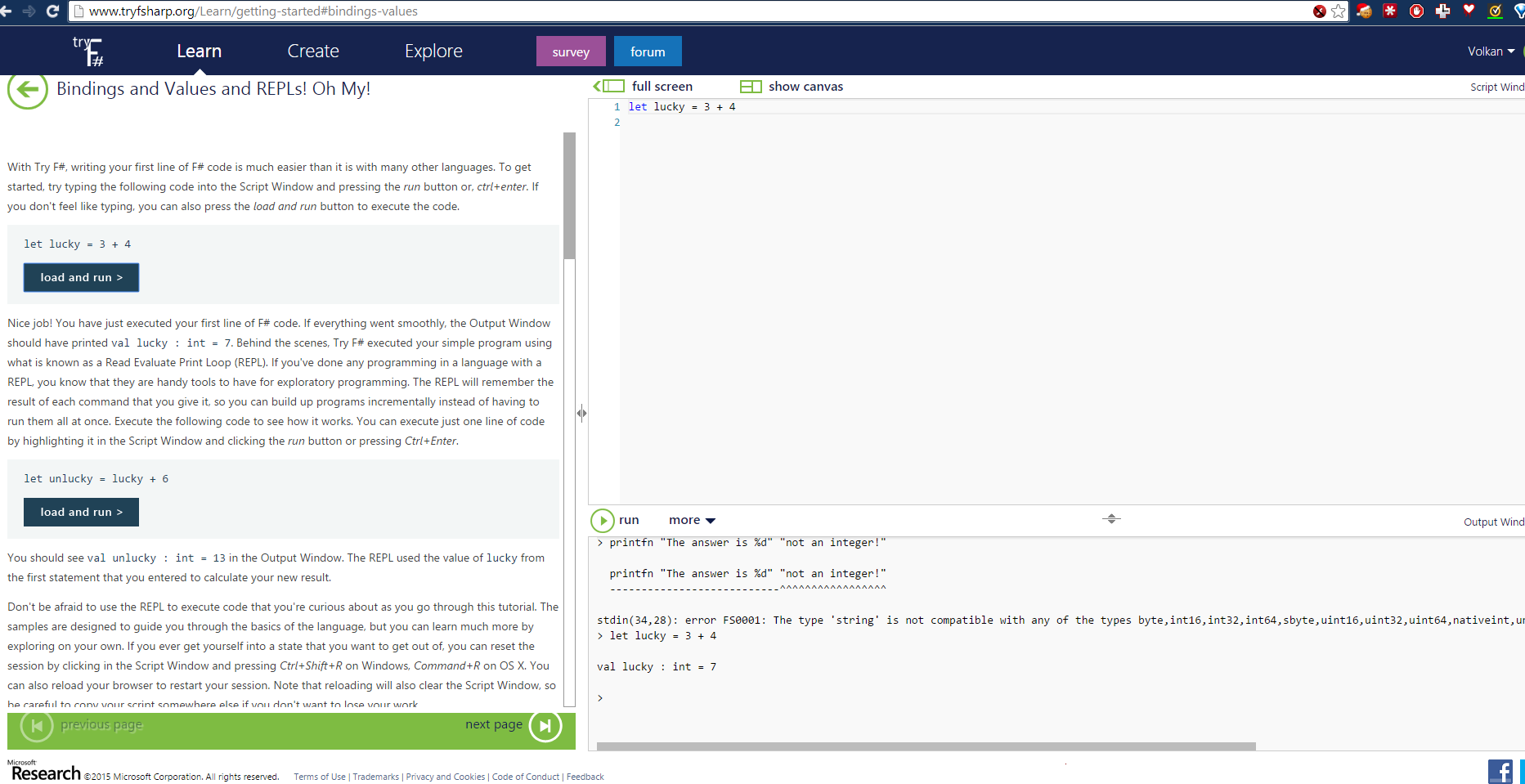

Moving on through the TryFSharp.org site, today I finished the last two sections of the Advanced part.

Notes

- Computation expressions can be used to “alter the standard evaluation rule of the language and define sub-languages that model certain programming patterns”.

We can alter the semantics of the language because the F# compiler rewrites (de-sugars) computation expressions before compiling them.

For example, the code snippet below

```fsharp
type Age =
    | PossiblyAlive of int
    | NotAlive

type AgeBuilder() =
    // Continue only while the age is in a plausible range; otherwise stop with NotAlive.
    member this.Bind(x, f) =
        match x with
        | PossiblyAlive(x) when x >= 0 && x <= 120 -> f(x)
        | _ -> NotAlive
    member this.Delay(f) = f()
    member this.Return(x) = PossiblyAlive x

let age = new AgeBuilder()

let willBeThere a y =
    age {
        let! current = PossiblyAlive a
        let! future = PossiblyAlive (y + a)
        return future
    }

willBeThere 38 150 // NotAlive, because 38 + 150 is outside 0..120
```

is de-sugared to this:

```fsharp
let willBeThere2 a y =
    age.Delay(fun () ->
        age.Bind(PossiblyAlive a, fun current ->
            age.Bind(PossiblyAlive (y+a), fun future ->
                age.Return(future))))

willBeThere2 38 80 // PossiblyAlive 118
```

At this point this concept seems too complicated to sink my teeth into. TryFSharp.org suggests further study of the following concepts to get a better understanding of computation expressions:

- The sophisticated architecture of function calls that are generated by de-sugaring

- Monads - the theory behind computation expressions
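To make the link between those two points a bit more concrete for myself, here is a minimal sketch of the same idea over the built-in `Option` type. The names `MaybeBuilder`, `maybe`, `safeDiv`, `viaExpression` and `viaBind` are my own placeholders and are not from TryFSharp.org. As far as I understand it, the builder only has to supply `Bind` and `Return`, and the computation expression is just a nicer way to write the nested `Option.bind` calls.

```fsharp
// Hypothetical builder: chains Option values and stops at the first None.
type MaybeBuilder() =
    member this.Bind(m, f) = Option.bind f m
    member this.Return(x) = Some x

let maybe = MaybeBuilder()

// Hypothetical helper: integer division that yields None instead of throwing on zero.
let safeDiv a b =
    if b = 0 then None else Some (a / b)

// Written as a computation expression.
let viaExpression a b c =
    maybe {
        let! x = safeDiv a b
        let! y = safeDiv x c
        return y
    }

// Roughly what the expression above turns into: nested bind calls.
let viaBind a b c =
    safeDiv a b
    |> Option.bind (fun x ->
        safeDiv x c
        |> Option.bind (fun y -> Some y))

// Both forms should agree, for a successful chain and for a failing one.
printfn "%b" (viaExpression 100 5 2 = viaBind 100 5 2)
printfn "%b" (viaExpression 1 0 2 = viaBind 1 0 2)
```

Dividing by zero in the first step means the second step never runs, which is the same short-circuit behaviour the `AgeBuilder` above gets from returning `NotAlive` in `Bind`.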

- Quotations are a language feature “that enables you to generate and work with F# code expressions programmatically. This feature lets you generate an abstract syntax tree that represents F# code. The abstract syntax tree can then be traversed and processed according to the needs of your application. For example, you can use the tree to generate F# code or generate code in some other language.”
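A small sketch of what "traversing the tree" could look like, assuming only the standard `Microsoft.FSharp.Quotations` patterns. The `render` function is a hypothetical helper of mine that handles just integer literals, variables, `+` and `*`; anything else falls through to a placeholder string.

```fsharp
open Microsoft.FSharp.Quotations
open Microsoft.FSharp.Quotations.Patterns
open Microsoft.FSharp.Quotations.DerivedPatterns

// Hypothetical helper: walks a quoted arithmetic expression and renders it as text.
let rec render (expr: Expr) : string =
    match expr with
    | SpecificCall <@@ (+) @@> (_, _, [left; right]) ->
        sprintf "(%s + %s)" (render left) (render right)
    | SpecificCall <@@ (*) @@> (_, _, [left; right]) ->
        sprintf "(%s * %s)" (render left) (render right)
    | Int32 n -> string n
    | Var v -> v.Name
    | other -> sprintf "<unsupported: %A>" other

// <@@ ... @@> produces an untyped quotation: the code is captured as a tree, not evaluated.
let quoted = <@@ 1 + 2 * 3 @@>

printfn "%s" (render quoted) // prints "(1 + (2 * 3))"
```

The expression is never evaluated to 7 here. The quotation only keeps its shape, which is why the same tree could in principle be turned into code for some other language instead of a string.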

Conclusion

After dabbling in TryFSharp.org for 4 days, I think it’s time to move on. There are 4 more sections on the site, but since I can only learn by building something on my own, I’ll try to come up with a small project that uses the basics. Otherwise, it’s likely that all this information will be forgotten. It’s already very overwhelming, and I need a small achievement to motivate myself.

So I leave TryFSharp.org at this point, for a while at least. Even though I don’t find the advanced topics well explained, it’s still a very nice resource for getting started.