Recently I needed to simulate HTTP responses from a 3rd party. I decided to use Nancy to quickly build a local web server that would handle my test requests and return the responses I wanted.

Here’s the definition of Nancy from their official website:

Nancy is a lightweight, low-ceremony, framework for building HTTP based services on .Net and Mono.

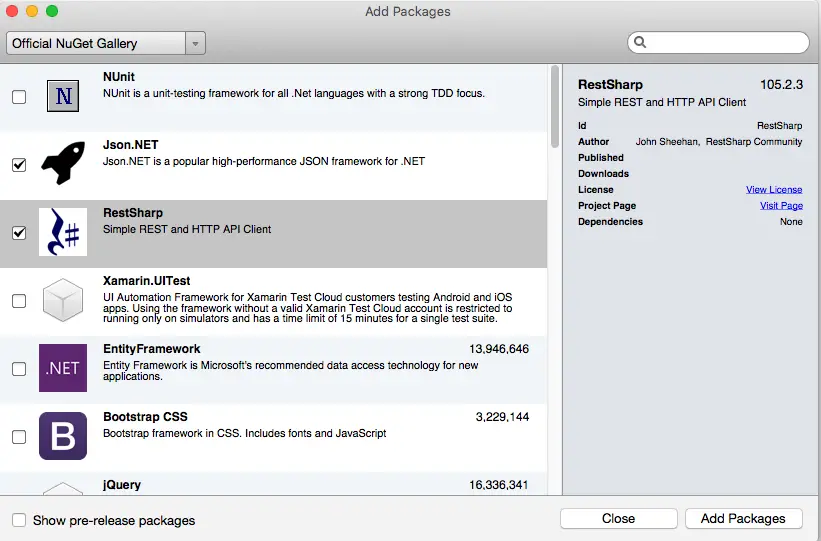

It can handle DELETE, GET, HEAD, OPTIONS, POST, PUT and PATCH requests. It’s very easy to customize and extend as it’s module-based. To build our tiny web server we are going to need the self-hosting package:

Install-Package Nancy.Hosting.Self

This also installs Nancy automatically, as the self-hosting package depends on it.

Self-hosting in action

The container application can be anything as long as it keeps running one way or another. A background service would be ideal for this task. Since all I need is testing, I just created a console application and added a Console.ReadKey() statement to keep it “alive”:

    using System;
    using Nancy.Hosting.Self;

    class Program
    {
        private string _url = "http://localhost";
        private int _port = 12345;
        private NancyHost _nancy;

        public Program()
        {
            var uri = new Uri($"{_url}:{_port}/");
            _nancy = new NancyHost(uri);
        }

        private void Start()
        {
            _nancy.Start();
            Console.WriteLine($"Started listening on port {_port}");

            // Block until a key is pressed so the host stays alive.
            Console.ReadKey();
            _nancy.Stop();
        }

        static void Main(string[] args)
        {
            var p = new Program();
            p.Start();
        }
    }

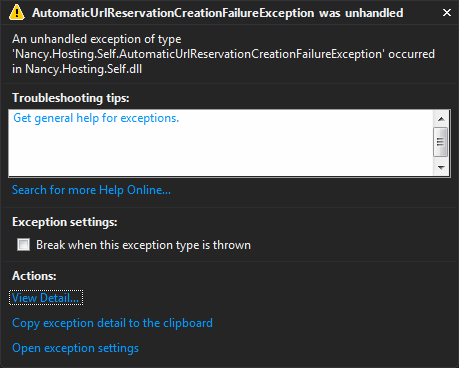

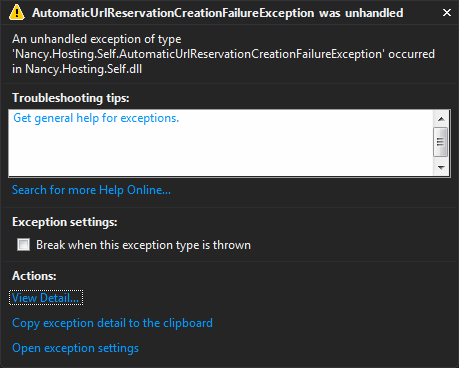

If you try this code, it’s likely that you’ll get an error (AutomaticUrlReservationCreationFailureException) saying:

The Nancy self host was unable to start, as no namespace reservation existed for the provided url(s).

Please either enable UrlReservations.CreateAutomatically on the HostConfiguration provided to

the NancyHost, or create the reservations manually with the (elevated) command(s):

netsh http add urlacl url="http://+:12345/" user="Everyone"

There are three ways to resolve this issue, two of which are already suggested in the error message:

- In an elevated command prompt (a fancy way of saying run as administrator!), run

netsh http add urlacl url="http://+:12345/" user="Everyone"

What add urlacl does is

Reserves the specified URL for non-administrator users and accounts

If you want to delete it later on you can use the following command

netsh http delete urlacl url=http://+:12345/

- Specify a host configuration to NancyHost like this:

    var configuration = new HostConfiguration()
    {
        UrlReservations = new UrlReservations() { CreateAutomatically = true }
    };

    _nancy = new NancyHost(configuration, uri);

This essentially does the same thing, and a UAC prompt pops up, so it’s not that automatic!

- Run Visual Studio (and the standalone application when deployed) as administrator

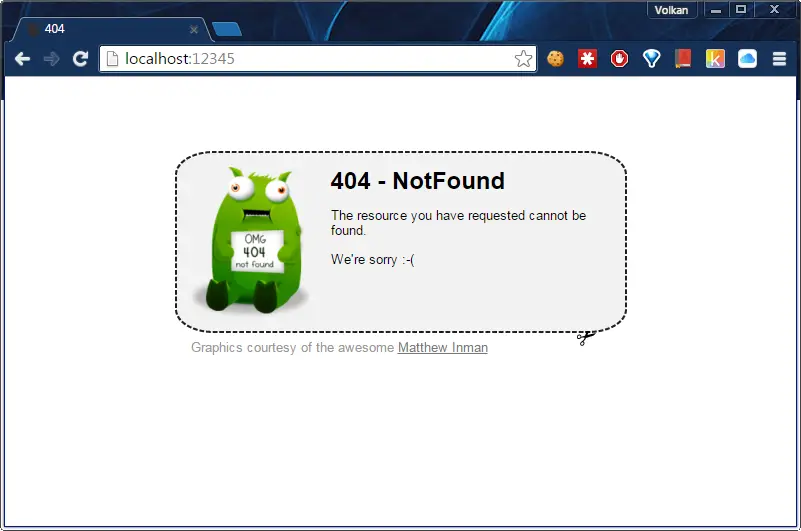

After applying any one of the three solutions, let’s run the application and try the address http://localhost:12345 in a browser and we get …

Excellent! We are actually getting a response from the server even though it’s just a 404 error.

Now let’s add some functionality, otherwise it isn’t terribly useful.

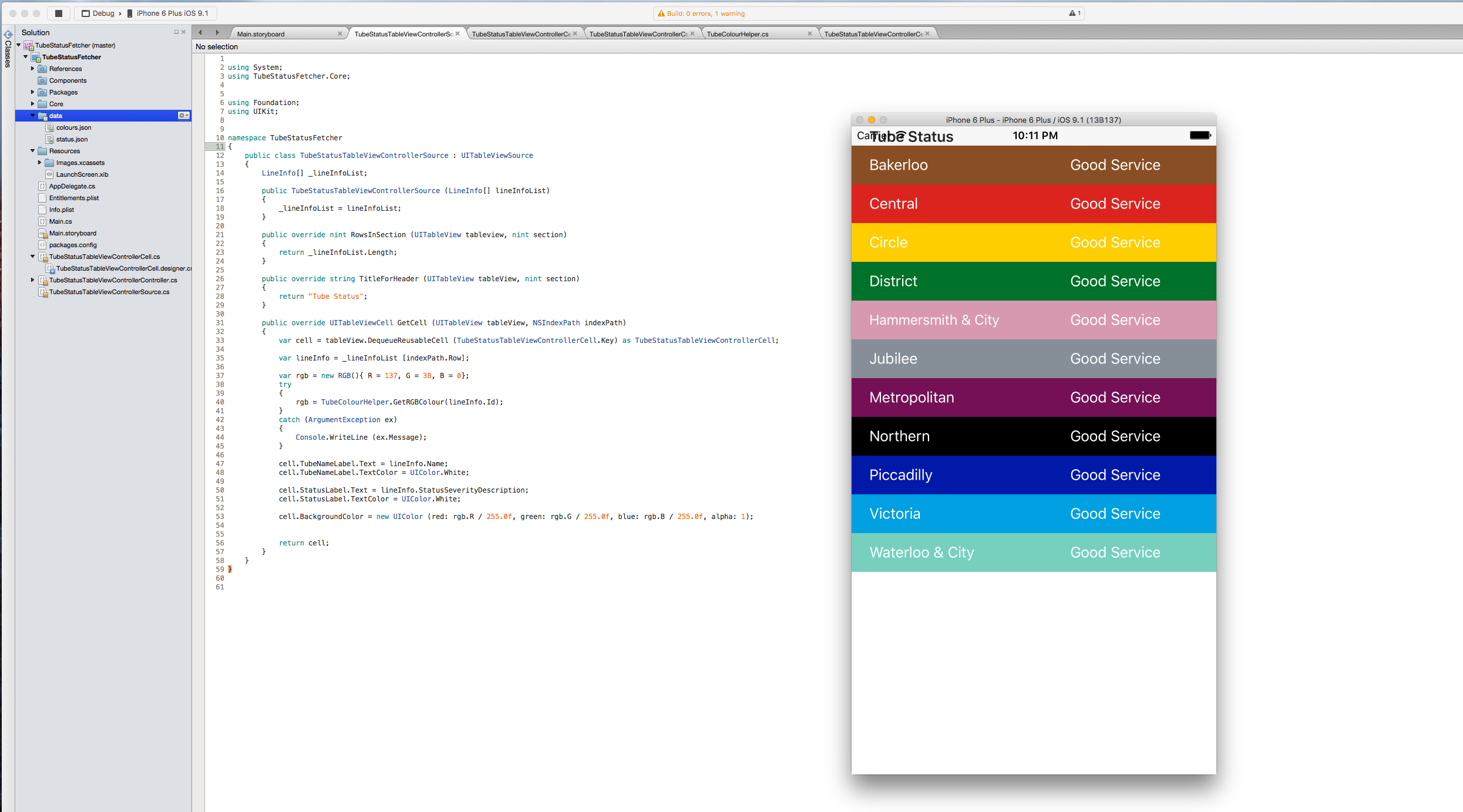

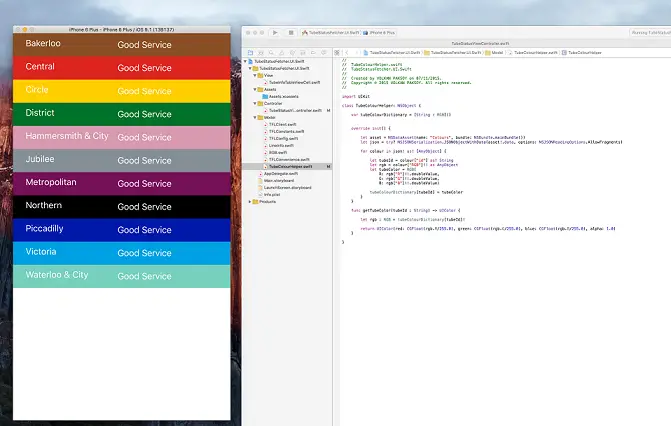

Handling requests

Requests are handled by modules. Creating a module is as simple as creating a class deriving from NancyModule. Let’s create two handlers for the root, one for GET requests and one for POST:

    public class SimpleModule : Nancy.NancyModule
    {
        public SimpleModule()
        {
            Get["/"] = _ => "Received GET request";
            Post["/"] = _ => "Received POST request";
        }
    }

Nancy automatically discovers all modules, so we don’t have to register them. If there are conflicting handlers, the last one discovered overrides the previous ones. For example, the following would work fine and the second GET handler would be executed:

    public class SimpleModule : Nancy.NancyModule
    {
        public SimpleModule()
        {
            Get["/"] = _ => "Received GET request";
            Post["/"] = _ => "Received POST request";
            Get["/"] = _ => "Let me have the request!";
        }
    }

In the simple example we used an underscore to represent the input as we didn’t care about it, but most of the time we would. In that case we can get the parameters captured from the route as a DynamicDictionary (a type that comes with Nancy). For example, let’s create a route for /user:

    public SimpleModule()
    {
        Get["/user/{id}"] = parameters =>
        {
            if (((int)parameters.id) == 666)
            {
                return $"All hail user #{parameters.id}! \\m/";
            }
            else
            {
                return "Just a regular user!";
            }
        };
    }

And send the GET request:

    GET http://localhost:12345/user/666 HTTP/1.1
    User-Agent: Fiddler
    Host: localhost:12345
    Content-Length: 2

which would return the response:

    HTTP/1.1 200 OK
    Content-Type: text/html
    Server: Microsoft-HTTPAPI/2.0
    Date: Tue, 10 Nov 2015 11:40:08 GMT
    Content-Length: 23

    All hail user #666! \m/

Working with input data: Request body

Now let’s try to handle the data posted in the request body. It can be accessed through the this.Request.Body property. For example, for the following request

    POST http://localhost:12345/ HTTP/1.1
    User-Agent: Fiddler
    Host: localhost:12345
    Content-Length: 55
    Content-Type: application/json

    {
        "username": "volkan",
        "isAdmin": "sure!"
    }

this code would first convert the request stream to a string and deserialize it to a POCO:

    Post["/"] = _ =>
    {
        // Request.Body is a stream; read it into a buffer first.
        var stream = this.Request.Body;
        var length = stream.Length;
        var data = new byte[length];
        stream.Read(data, 0, (int)length);

        // Decode as UTF-8 rather than the machine's default code page.
        var body = System.Text.Encoding.UTF8.GetString(data);
        var request = JsonConvert.DeserializeObject<SimpleRequest>(body);
        return 200;
    };
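A single Stream.Read call is not guaranteed to fill the buffer, so a slightly more defensive way to turn the body into a string is to let a StreamReader read to the end. The following is a minimal sketch using only the base class library; BodyReader and ReadAllText are hypothetical names, and it assumes the body is UTF-8 text.

```csharp
using System.IO;
using System.Text;

// Hypothetical helper, not part of Nancy.
public static class BodyReader
{
    // Reads the whole stream as text. StreamReader keeps reading until the
    // end of the stream, unlike a single Stream.Read call.
    public static string ReadAllText(Stream body)
    {
        // Rewind if possible, in case something already consumed the stream.
        if (body.CanSeek)
        {
            body.Position = 0;
        }

        // leaveOpen: true, so the caller still owns the stream afterwards.
        using (var reader = new StreamReader(body, Encoding.UTF8, true, 1024, true))
        {
            return reader.ReadToEnd();
        }
    }
}
```

Inside a handler this could be called as BodyReader.ReadAllText(this.Request.Body), since the request body is exposed as a Stream.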

If the data was posted from a form, for example, and sent in the following format in the body

username=volkan&isAdmin=sure!

then we could simply convert it to a dictionary with a little bit of LINQ:

    Post["/"] = parameters =>
    {
        var stream = this.Request.Body;
        long length = stream.Length;
        byte[] data = new byte[length];
        stream.Read(data, 0, (int)length);
        string body = System.Text.Encoding.UTF8.GetString(data);

        // Split "key=value&key=value" into a dictionary (needs using System.Linq).
        var p = body.Split('&')
            .Select(s => s.Split('='))
            .ToDictionary(k => k.ElementAt(0), v => v.ElementAt(1));

        if (p["username"] == "volkan")
            return "awesome!";
        else
            return "meh!";
    };
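The Split-based parsing works for the sample body, but real form posts percent-encode reserved characters and use '+' for spaces, and a value may itself contain '='. A minimal sketch of a parser that handles those cases with only the base class library could look like this; FormBody and Parse are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of Nancy.
public static class FormBody
{
    public static Dictionary<string, string> Parse(string body)
    {
        var result = new Dictionary<string, string>();
        var pairs = body.Split(new[] { '&' }, StringSplitOptions.RemoveEmptyEntries);

        foreach (var pair in pairs)
        {
            // Split on the first '=' only, so values may contain '='.
            var parts = pair.Split(new[] { '=' }, 2);
            var key = Decode(parts[0]);
            var value = parts.Length > 1 ? Decode(parts[1]) : string.Empty;

            // The last value wins when a key is repeated.
            result[key] = value;
        }

        return result;
    }

    // Form encoding uses '+' for spaces and %XX for other reserved characters.
    private static string Decode(string s)
    {
        return Uri.UnescapeDataString(s.Replace('+', ' '));
    }
}
```

With this helper, FormBody.Parse("username=volkan&isAdmin=sure%21")["isAdmin"] yields the decoded value sure!.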

This is nice, but it’s a lot of work to read the whole body and manually deserialize it! Fortunately Nancy supports model binding. First we need to add the using statement, as the Bind extension method lives in Nancy.ModelBinding:

using Nancy.ModelBinding;

Now we can simplify the code with the help of model binding:

    Post["/"] = _ =>
    {
        var request = this.Bind<SimpleRequest>();
        return request.username;
    };

The important thing to note is to send the data with the appropriate content type. For the form data example the request should be like this:

    POST http://localhost:12345/ HTTP/1.1
    User-Agent: Fiddler
    Host: localhost:12345
    Content-Length: 29
    Content-Type: application/x-www-form-urlencoded

    username=volkan&isAdmin=sure!

It also works for binding JSON to the same POCO.
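The post never shows SimpleRequest itself. A minimal sketch consistent with the examples above (the handler reads request.username, and isAdmin arrives as text) might look like the following. The property types are an assumption.

```csharp
// Hypothetical shape of the POCO used with this.Bind<SimpleRequest>().
// Property names mirror the field names sent in the request body.
public class SimpleRequest
{
    public string username { get; set; }

    // Kept as a string because the sample payload sends "sure!", not a boolean.
    public string isAdmin { get; set; }
}
```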

Preparing responses

Nancy is very flexible in terms of responses. As shown in the above examples you can return a string

    Post["/"] = _ =>
    {
        return "This is a valid response";
    };

which would yield this HTTP message on the wire:

    HTTP/1.1 200 OK
    Content-Type: text/html
    Server: Microsoft-HTTPAPI/2.0
    Date: Tue, 10 Nov 2015 15:48:12 GMT
    Content-Length: 24

    This is a valid response

Response code is set to 200 - OK automatically and the text is sent in the response body.

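We can also set just the status code and return a response with a simple one-liner, for example by returning HttpStatusCode.MethodNotAllowed (or the integer 405) from the handler,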

which would produce:

    HTTP/1.1 405 Method Not Allowed
    Content-Type: text/html
    Server: Microsoft-HTTPAPI/2.0
    Date: Tue, 10 Nov 2015 15:51:36 GMT
    Content-Length: 0

To prepare more complex responses with headers and everything we can construct a new Response object like this:

    Post["/"] = _ =>
    {
        string jsonString = "{ username: \"admin\", password: \"just kidding\" }";
        byte[] jsonBytes = Encoding.UTF8.GetBytes(jsonString);

        return new Response()
        {
            StatusCode = HttpStatusCode.OK,
            ContentType = "application/json",
            ReasonPhrase = "Because why not!",
            Headers = new Dictionary<string, string>()
            {
                { "Content-Type", "application/json" },
                { "X-Custom-Header", "Sup?" }
            },
            Contents = c => c.Write(jsonBytes, 0, jsonBytes.Length)
        };
    };

and we would get this at the other end of the line:

    HTTP/1.1 200 Because why not!
    Content-Type: application/json
    Server: Microsoft-HTTPAPI/2.0
    X-Custom-Header: Sup?
    Date: Tue, 10 Nov 2015 16:09:19 GMT
    Content-Length: 47

    { username: "admin", password: "just kidding" }

The module’s Response property also comes with a lot of useful extension methods like AsJson, AsXml and AsRedirect. For example, we could simplify returning a JSON response like this:

    Post["/"] = _ =>
    {
        return Response.AsJson<SimpleResponse>(
            new SimpleResponse()
            {
                Status = "A-OK!", ErrorCode = 1, Description = "All systems are go!"
            });
    };

and the result would contain the appropriate header and status code:

    HTTP/1.1 200 OK
    Content-Type: application/json; charset=utf-8
    Server: Microsoft-HTTPAPI/2.0
    Date: Tue, 10 Nov 2015 16:19:18 GMT
    Content-Length: 68

    {"status":"A-OK!","errorCode":1,"description":"All systems are go!"}
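SimpleResponse is not shown in the post either. Judging by the initializer and the JSON output above, a minimal sketch of it might be the following. The property types are inferred from the sample values and are an assumption.

```csharp
// Hypothetical shape of the POCO passed to Response.AsJson.
// Types are inferred from the sample output: two strings and a number.
public class SimpleResponse
{
    public string Status { get; set; }
    public int ErrorCode { get; set; }
    public string Description { get; set; }
}
```

Note that the property names are PascalCase here while the keys in the output are camelCase, which suggests the serializer adjusts the casing on the way out.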

One extension I like is the AsRedirect method. The following example would return Google search results for a given parameter:

    Get["/search"] = parameters =>
    {
        string s = this.Request.Query["q"];
        return Response.AsRedirect($"http://www.google.com/search?q={s}");
    };
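The redirect example interpolates the raw query value into the target URL, which breaks for input containing characters such as '&', '#' or spaces. A minimal sketch of building the URL with the value escaped first is shown below; SearchUrl and Build are hypothetical names.

```csharp
using System;

// Hypothetical helper, not part of Nancy.
public static class SearchUrl
{
    public static string Build(string query)
    {
        // EscapeDataString percent-encodes reserved characters so the value
        // stays a single query-string parameter.
        return "http://www.google.com/search?q=" + Uri.EscapeDataString(query ?? string.Empty);
    }
}
```

The handler could then return Response.AsRedirect(SearchUrl.Build(s)) instead of interpolating s directly.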

HTTPS

What if we needed to support HTTPS for our tests for some reason? Fear not, Nancy covers that too. By default, if we just try to use HTTPS by changing the protocol we would get this exception:

The connection to ‘localhost’ failed.

System.Security.SecurityException Failed to negotiate HTTPS connection with server.fiddler.network.https HTTPS handshake to localhost (for #2) failed. System.IO.IOException Unable to read data from the transport connection: An existing connection was forcibly closed by the remote host.

The solution is to create a self-signed certificate and register it using the netsh http add sslcert command. Here’s the step-by-step process:

- Create a self-signed certificate: Open a Visual Studio command prompt and enter the following command:

You can provide more properties so that the certificate gets a name that makes sense. Here’s an MSDN page to

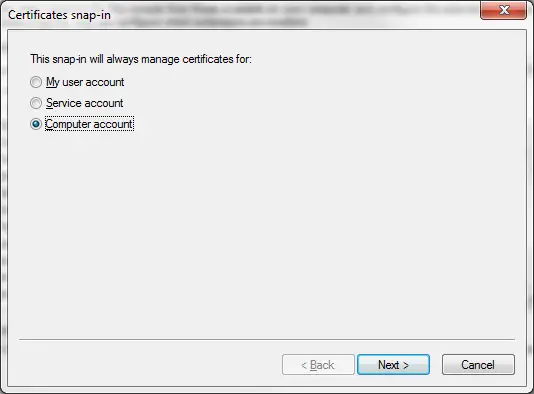

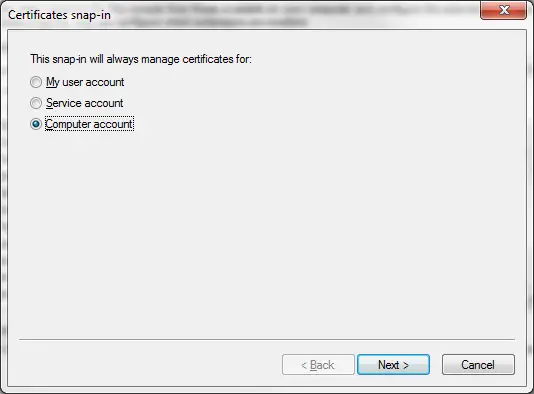

- Run mmc and add the Certificates snap-in. Make sure to select Computer Account.

I selected My User Account at first and it gave the following error:

SSL Certificate add failed, Error: 1312 A specified logon session does not exist. It may already have been terminated.

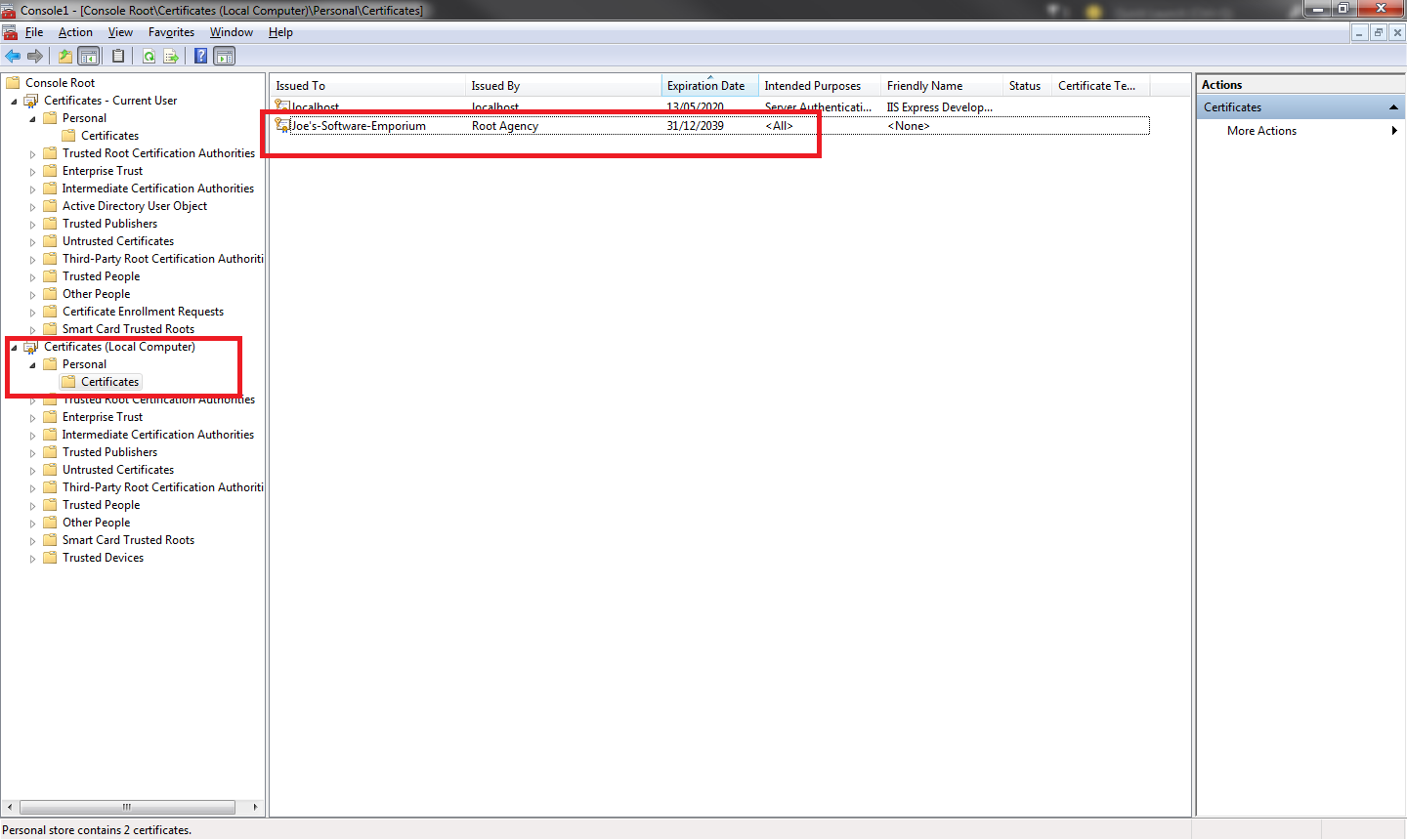

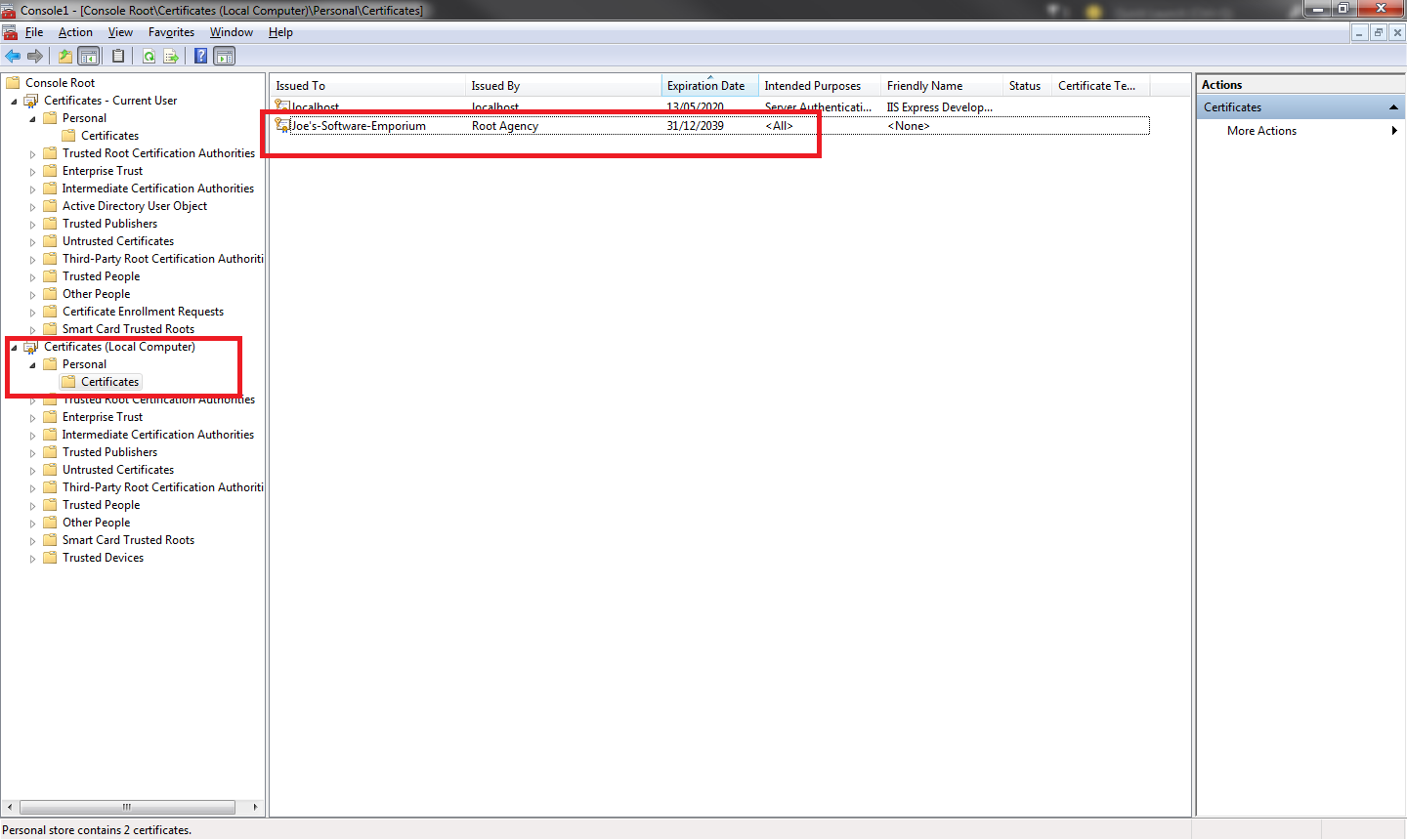

In that case the solution is just to drag and drop the certificate to the computer account as shown below:

- Right-click on Certificates (Local Computer) -> Personal -> Certificates, select All tasks -> Import and browse to the nancy.cer file created in step 1

-

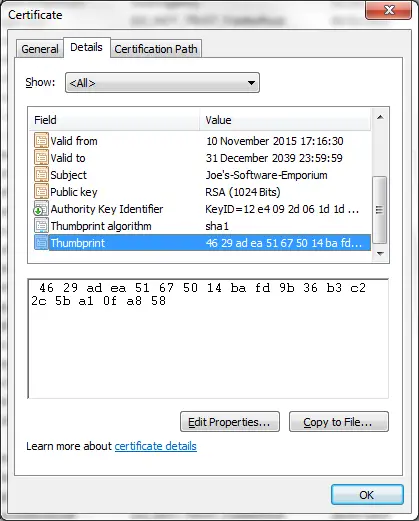

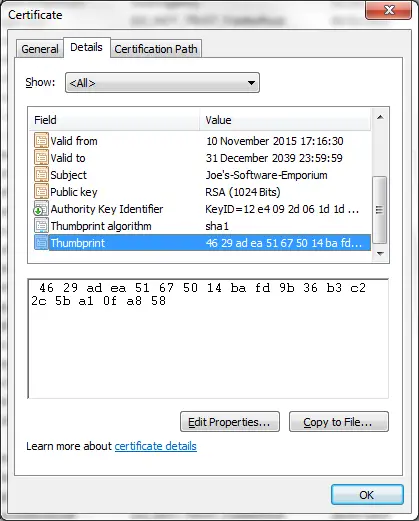

Double-click on the certificate, switch to Details tab and scroll to the bottom and copy the Thumbprint value (and remove the spaces after copied it)

- Now enter the following commands. The first one is the same as before, just with HTTPS as the protocol. The second command adds the certificate we’ve just created.

    netsh http add urlacl url=https://+:12345/ user="Everyone"
    netsh http add sslcert ipport=0.0.0.0:12345 certhash=653a1c60d4daaae00b2a103f242eac965ca21bec appid={A0DEC7A4-CF28-42FD-9B85-AFFDDD4FDD0F} clientcertnegotiation=enable

Here appid can be any GUID.
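The thumbprint clean-up from the earlier step (removing the spaces after copying) can also be scripted. The sketch below keeps only hexadecimal characters, which drops spaces as well as any invisible characters picked up when copying from the certificate dialog. Thumbprint and Normalize are hypothetical names.

```csharp
using System;
using System.Linq;

// Hypothetical helper for preparing the certhash value.
public static class Thumbprint
{
    public static string Normalize(string raw)
    {
        // Keep only 0-9, a-f and A-F; everything else is discarded.
        var hex = raw.Where(c => Uri.IsHexDigit(c)).ToArray();
        return new string(hex).ToLowerInvariant();
    }
}
```

A SHA-1 thumbprint should come out as 40 characters, so checking the length of the result is a quick sanity test before passing it to netsh.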

Let’s take it out for a test drive:

    Get["/"] = parameters =>
    {
        return "Response over HTTPS! Weeee!";
    };

This request

    GET https://localhost:12345 HTTP/1.1
    Host: localhost:12345

returns this response

    HTTP/1.1 200 OK
    Content-Type: text/html
    Server: Microsoft-HTTPAPI/2.0
    Date: Wed, 11 Nov 2015 10:24:58 GMT
    Content-Length: 27

    Response over HTTPS! Weeee!

Conclusion

There are a few alternatives when you need a small web server to test something locally. Nancy is one of them. It’s lightweight and easy to configure and use. Apparently you can even host it on a Raspberry Pi!

Resources