Auto-Scaling using AWS Management Console

Auto-scaling has always been a feature of Amazon Web Services (AWS). Until today, it could be done in 2 ways:

- Using command line tool (See resources section for the link)

- Using Elastic BeanStalk to deploy your application

Yesterday (10/12/2013) they announced they added Auto-Scaling support to AWS console. I was planning to create auto-scaling my blog anyway so I cannot think of a better time to apply this.

Auto-scaling using AWS Management Console

Step 01: Launch Configuration

First we tell AWS what we want to launch. This step is a lot like creating a new EC2 instance. First you select an AMI. So before I started I created an AMI of my current blog and selected that one for the launch configuration. Then we select the instance properties. In this wizard we have the option for using spot instances. They are not suitable for Internet-facing applications so I’ll skip that part.

Step 02: Auto Scaling Group

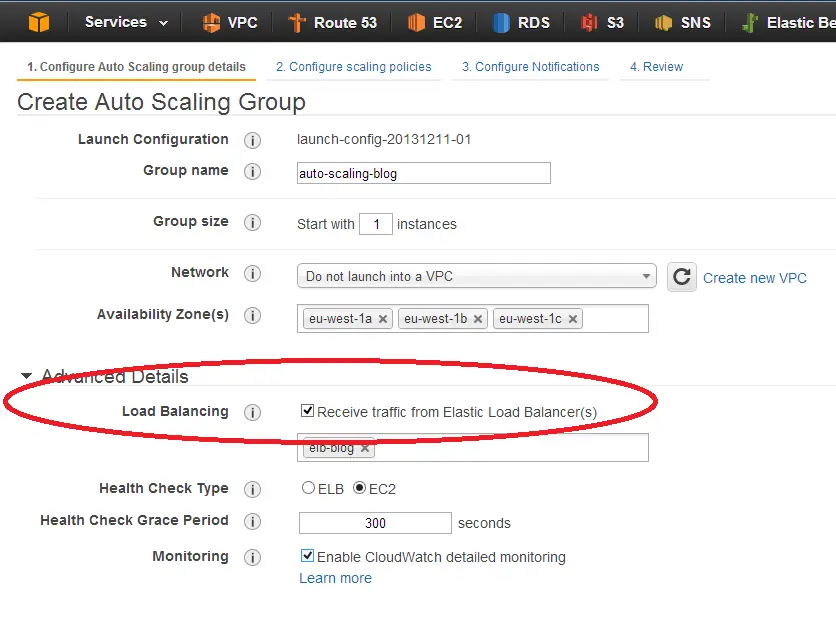

At the end of Launch Configuration wizard we can select create auto-scaling group with that launch configuration and jump right into Step 2. First we specify the name and the initial instance count for the group. Also we need to choose at least 1 availability zone. I always select all of them, I’m not sure if there is any trade-off with narrowing down your selection.

An important point to pay attention here is to expand Advanced Details section because it contains the load balancer selection. For web applications auto-scaling makes sense when the instances are behind a load-balancer. Otherwise new instances could not be reached anyway. Once you create the auto-scaling group you cannot associate it with an ELB so make sure you select your load balancer at this step.

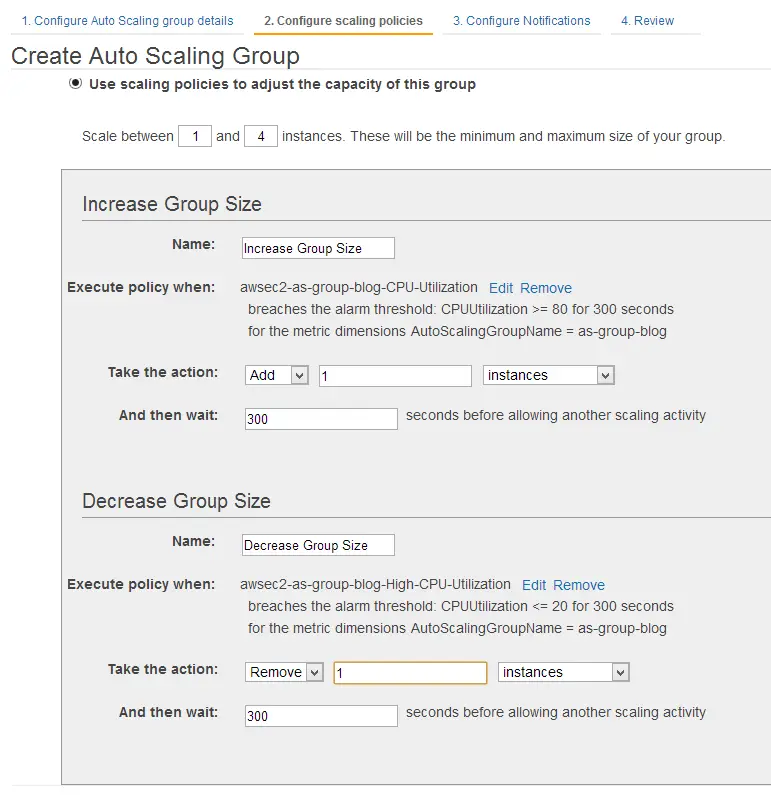

After comes another important step: Specifying scaling policies. Basically, telling AWS the action to take when it needs to scale up or down and when to do it. “When” is defined by CloudWatch alarms. For scaling up, I added an alarm for average CPU utilization over 80% for 5 minutes and for scaling up CPU utilization under 20% for 5 minutes. When high CPU alarm goes off it will take the action we select, which in my case is adding 1 more instance. And scaling down is just the opposite: remove 1 instance from the existing machine farm.

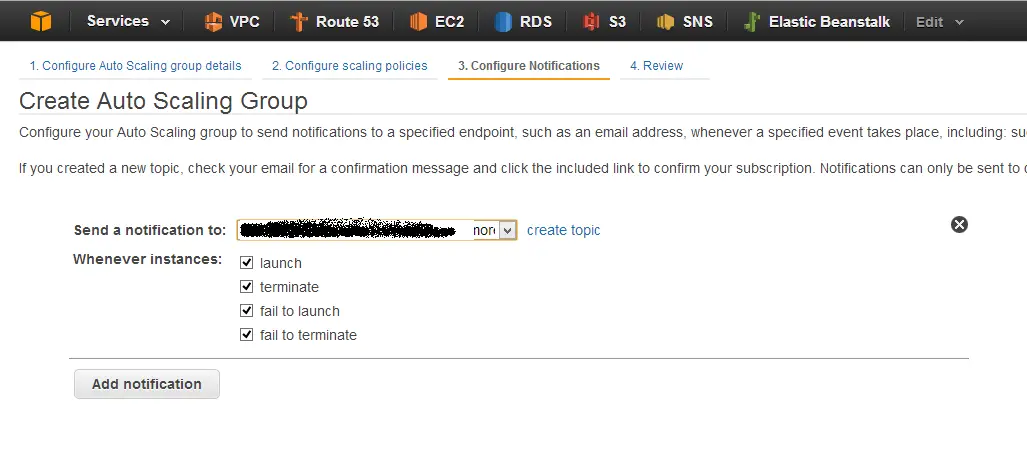

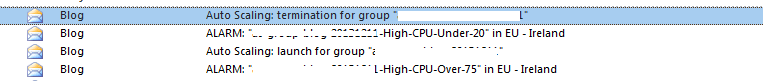

On next step we define the notifications we want to receive when an AS event is triggered. I would definitely would like to know everything that happens to my machines so I requested an email for all events.

That’s all it takes to create an AS group using the wizard.

Testing the scaling

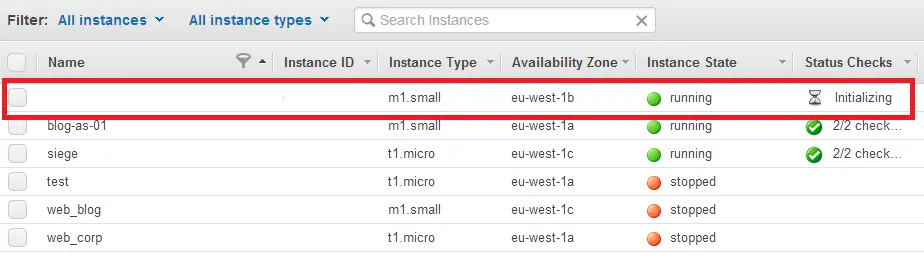

The easiest way to test auto-scaling group is to terminate the instance it just launched. As you can see below once I killed the instance it immediately launched another one to match the minimum number requirement of AS group. So auto-scaling group is working but how can I be sure that it will launch a new instance when I need it most. Time to make it sweat a little! But first we have to setup an environment to create load on the system:

Installing Siege

The easiest and simplest load testing tool I know is a Linux-based one called Siege. To prepare my simple load testing environment I quickly downloaded siege:

wget http://www.joedog.org/pub/siege/siege-latest.tar.gz

tar -xzvf siege-latest.tar.gz

It requires a C compiler which doesn’t come out-of-the-box with an Amazon Linux AMI. So first we need to install that:

yum install gcc*

And configure it by

./configure

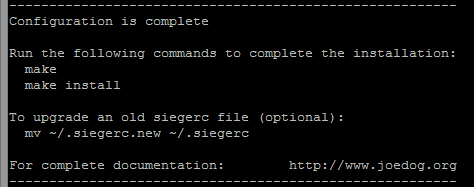

At the end of the configuration it instructs us to run the following commands:

So after running make Siege is ready to go. We can check the configuration by

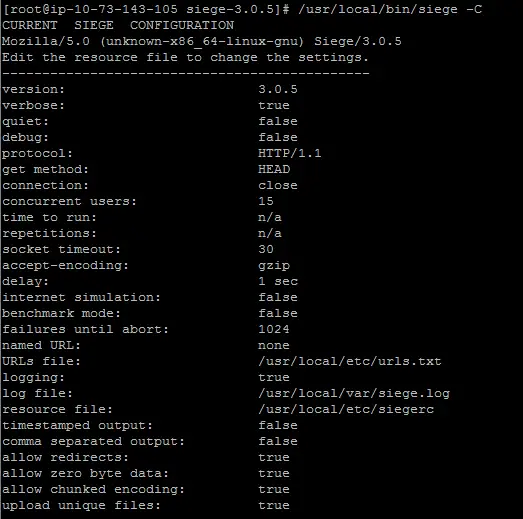

/usr/local/bin/siege -C

It should display the current version and other details about the tool.

Ready to go

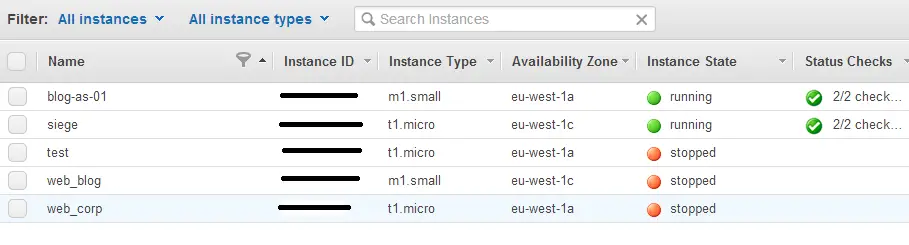

Now, we have a micro instance running Siege and a small instance launched by auto-scaling.

The auto-scaling is supposed to launch another instance and add it to load balancer if the CPU usage is too high on the existing one. Let’s see if it’s really working.

Under Siege!

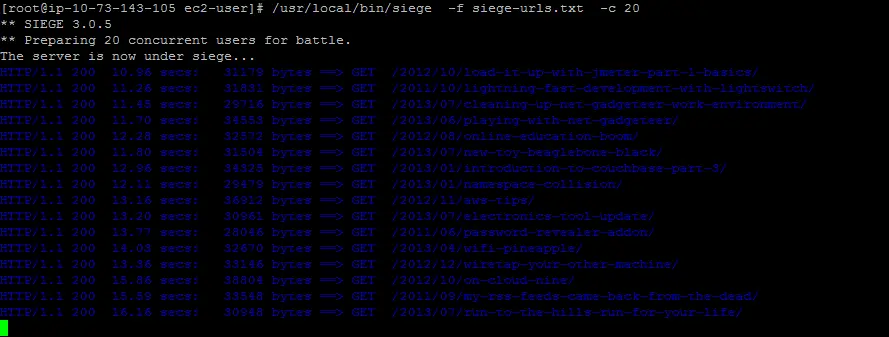

I first created a URL file from my sitemap so that the load can be more realistic. I fired up 20 threads and it started to bombard my site:

When I try to load my site it was incredibly slow. The CPU usage kept rising on the single instance until the CloudWatch alarm went off. It triggered auto-scale to launch a new instance.

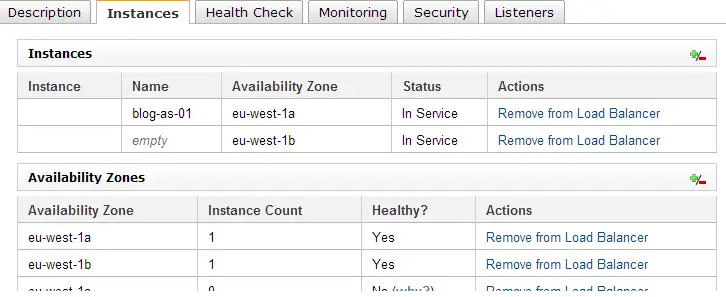

Now, I had 2 instances to share the load but that could only happen if the new instance was added to the Elastic Load Balancer (ELB) automatically. After a few minutes it passed the health checks and went in service.

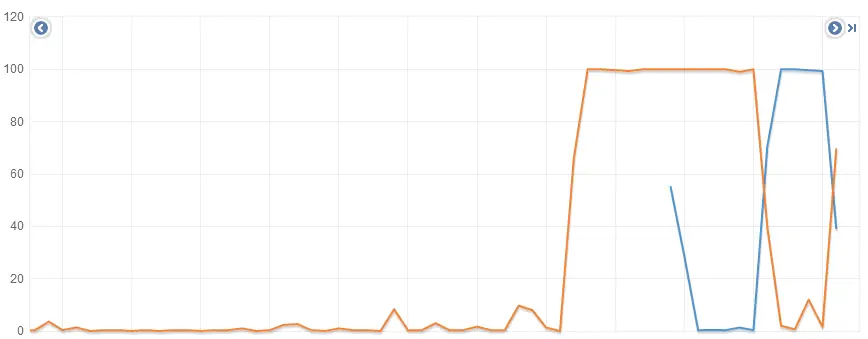

At this point I had 2 instances and when I tried to load posts from my blog I noticed it was quite fast again. The CPU usage graph below tells how it all went down:

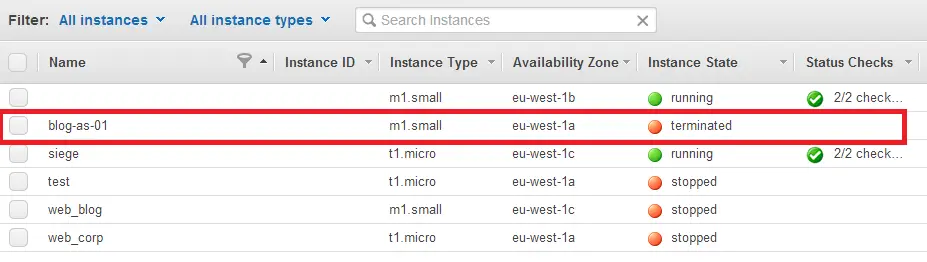

My first instance (orange) was running silently and peacefully until it was attacked by Siege. After a few minutes of hard times the cavalry came to rescue (blue instance) and started getting its fair share of the load. Then ELB distributed load as evenly as possible making the system running smoothly again. OK, so the system can withstand a spike and scale itself but it costs money. What’s going to happen after the storm. So I stopped Siege and sure enough, as we’d expect, after a few minutes Low CPU alarm kicked off and set the instance count back to 1 by terminating one of the instances.

Also, I was notified in every step of this process. So that I could be able to keep track of my instances at all times.

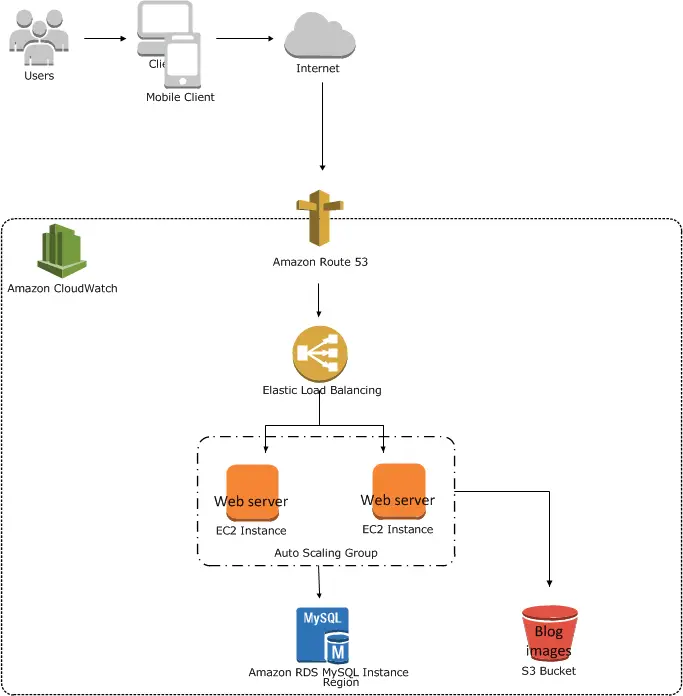

Architecture of the system

So at this point the architecture of the system looks like this:

I’m planning to cover some basics (EC2, RDS, S3) in more detail in a later post. Also I’ll try to add more AWS services and enhance this architecture as I go along.

Final Words

- If you are planning to use auto-scaling in production environment make sure to backup all your stuff externally. Also create snapshots for all the volumes.

- Even though network traffic is cheap it still costs. So for extended tests I suggest you keep an eye on your billing statement

- In Amazon Linux AMI Apache and MySQL don’t start automatically so you may need to update your configuration like I did. I used the script I found here.